Image credit: Liu et al.

Abstract

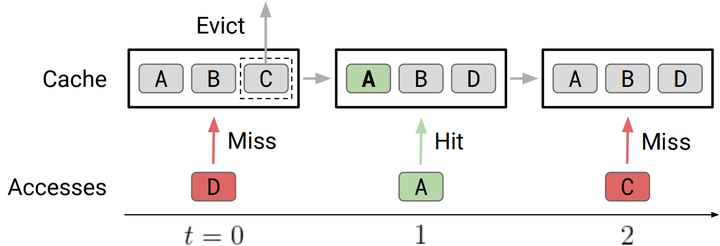

Effective caching is crucial for the performance of modern-day computing systems. A key optimization problem arising in caching, namely which item to evict to make room for a new item, cannot be solved optimally without knowing the future. There are many classical approximation algorithms for this problem, but more recently researchers have started to successfully apply machine learning to decide what to evict by discovering implicit input patterns and predicting the future. While machine learning typically does not provide any worst-case guarantees, the new field of learning-augmented algorithms proposes solutions that leverage classical online caching algorithms to make machine-learned predictors robust. We are the first to comprehensively evaluate these learning-augmented algorithms on real-world caching datasets and state-of-the-art machine-learned predictors. We show that a straightforward method (blindly following either a predictor or a classical robust algorithm, and switching whenever one becomes worse than the other) has only a low overhead over a well-performing predictor, while competing with classical methods when the coupled predictor fails, thus providing a cheap worst-case insurance.
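The switching idea described in the abstract can be illustrated with a minimal sketch. It rests on several assumptions that the abstract does not confirm: LRU stands in for the classical robust algorithm, the predictor supplies a predicted next-arrival time with each request, and switching is done lazily (the real cache only moves toward the followed policy when it has to evict). All names (`LRUPolicy`, `FollowPredictionsPolicy`, `combined_misses`, `true_next_use`) are hypothetical and chosen for illustration only.

```python
from collections import OrderedDict


class LRUPolicy:
    """Shadow simulation of LRU (assumed here as the classical robust algorithm)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.cache = OrderedDict()
        self.misses = 0

    def access(self, item):
        if item in self.cache:
            self.cache.move_to_end(item)  # mark as most recently used
            return
        self.misses += 1
        if len(self.cache) >= self.capacity:
            self.cache.popitem(last=False)  # evict least recently used
        self.cache[item] = True

    def contents(self):
        return self.cache.keys()


class FollowPredictionsPolicy:
    """Shadow simulation that blindly trusts predicted next-arrival times."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.next_use = {}  # item -> predicted time of its next request
        self.misses = 0

    def access(self, item, predicted_next_use):
        if item not in self.next_use:
            self.misses += 1
            if len(self.next_use) >= self.capacity:
                # Evict the item predicted to be needed furthest in the future.
                victim = max(self.next_use, key=self.next_use.get)
                del self.next_use[victim]
        self.next_use[item] = predicted_next_use

    def contents(self):
        return self.next_use.keys()


def combined_misses(requests, predictions, capacity):
    """Follow one shadow policy; switch when the other has strictly fewer misses."""
    lru = LRUPolicy(capacity)
    pred = FollowPredictionsPolicy(capacity)
    followed = pred  # assumption: start by trusting the predictor
    cache = []       # contents of the real cache
    misses = 0
    for item, predicted_next_use in zip(requests, predictions):
        lru.access(item)
        pred.access(item, predicted_next_use)
        other = lru if followed is pred else pred
        if other.misses < followed.misses:
            followed = other
        if item in cache:
            continue
        misses += 1
        if len(cache) >= capacity:
            # Lazy switching: evict something the followed policy does not hold.
            # Such an item always exists, because the followed policy holds
            # `item` and the real cache does not.
            target = followed.contents()
            victim = next(x for x in cache if x not in target)
            cache.remove(victim)
        cache.append(item)
    return misses


def true_next_use(requests):
    """Exact next-arrival times, i.e. what a perfect predictor would output."""
    nxt = [float("inf")] * len(requests)
    last_seen = {}
    for t in range(len(requests) - 1, -1, -1):
        nxt[t] = last_seen.get(requests[t], float("inf"))
        last_seen[requests[t]] = t
    return nxt
```

For the request sequence a, b, c, a, b, c with capacity 2 and exact next-arrival predictions, this sketch incurs 4 misses, whereas LRU on its own incurs 6. The algorithms evaluated in the paper may differ in how they compare costs and how they pay for switching; the sketch only shows the overall shape of the approach.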